Hello, my friends. My name is Bohdan. This is my True SEO School, the course SEO Fundamentals, and we are now at lesson number seven, where we discuss SEO experiments. We already touched on the topic of SEO experiments previously, but let’s go deeper into it.

Recap of the lessons on the data acquisition funnel

But before we do so, let’s quickly recap what we discussed in lessons four to six, which were built around the idea of the data acquisition funnel.

What we learned in those three lessons is that the data acquisition funnel characteristic of SEO is quite long and sequential. Data acquisition starts with bots (not humans) that come to a page, which serves as the pivotal element of data acquisition, and then proceeds to the visible part, where humans start interacting with the elements of the page.

This combined funnel gives rise to two different types of data:

- Technical (bot-related) data and

- Content (human-related) data, including clicks, impressions, etc.

Notably, technical data may seem quite strange to marketing professionals, who associate it with the engineering world.

Also, SEO data, diverse as they are, require interpretation: apart from being technical or non-technical, they hinge on the URL and involve the search query, which itself needs interpreting. This interpretation must be done internally, in line with the company’s business model.

We also discussed that SEO optimization takes place on two levels:

- the technical level, which is mostly invisible to humans and means “tweaking” the code, and

- the visible level, i.e. dealing with the quality of content.

And here the idea of an experiment comes onto the stage, because we have no requirements or direct instructions to fulfil. Apart from some minor technical things, wherever we go, we need to experiment.

These experiments take two broad forms:

- “Vote for this”, or “Do you, the customer, prefer this or that option or version of a page?”

- “Vote for us”, or “Do you prefer to see us or a competitor in this spot on the results page?” This includes signaling to a search engine that this position is more effective when given to this enterprise than to a competitor.

These polls run constantly, and SEO takes an active part in staging them. This is how we arrived at the question of the goal of SEO, which often stays at the back of our minds.

But what is the goal of SEO? Don’t we usually say it is to increase traffic? Here we go further and say that the goal of SEO is to:

- arrive at a hypothesis based on data analysis,

- assess the viability of this hypothesis,

- prepare the conditions for testing hypotheses (What data do we need? What type of experiment do we run?),

- disseminate the knowledge gained from these experiments, and finally

- contribute to the creation of a stable infrastructure that makes this process repeatable.

Characteristics of an SEO experiment

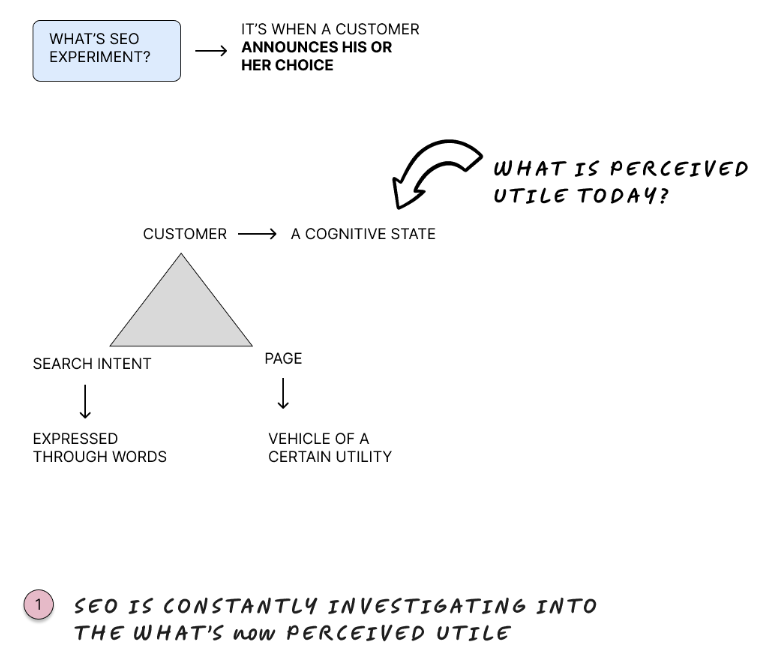

So what is an SEO experiment? It is when the customer announces his or her choice: what is perceived as useful with respect to a certain search intent? So we have:

- a customer,

- a page that contains some icon or symbol of utility, and

- the customer’s search intent, or why exactly he or she came searching.

It takes this triangle to determine whether a vehicle of utility (the page) meets an adequate threshold of quality with regard to a particular search intent and motivation.

In this sense, a person running an experiment is constantly investigating the current state of customer value, asking: what is perceived as valuable today, as of now?

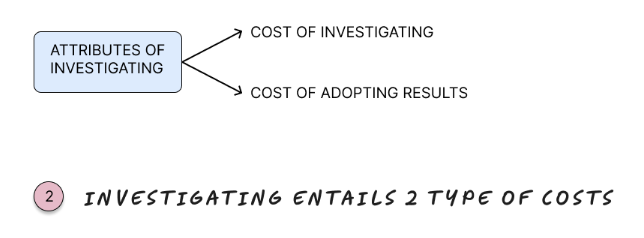

This investigation comes with two attributes:

- the cost of investigating and

- the cost of adopting the results.

Cost of investigating

So let’s discuss how I see the cost of investigation.

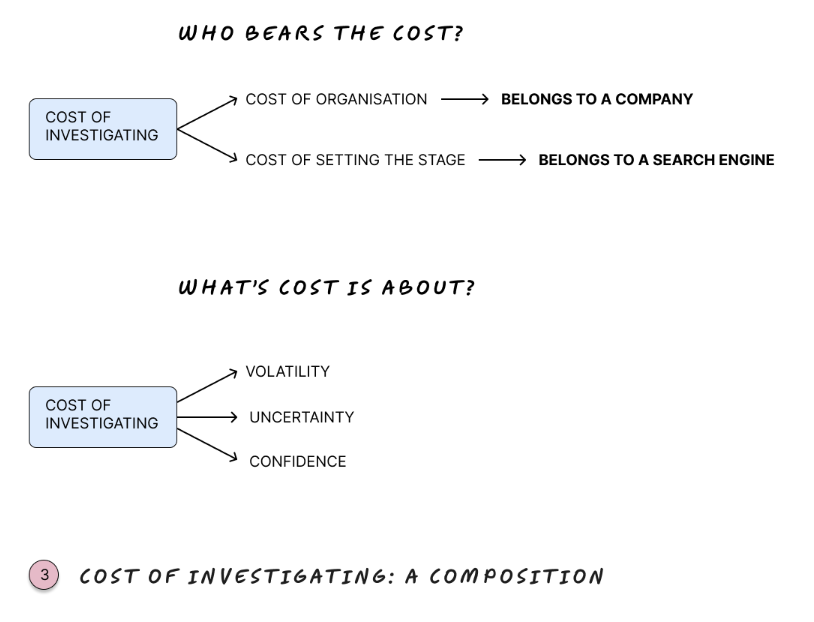

Whatever type of experiment is running, it has an internal cost comprising:

- the cost of set-up to frame the experiment (borne by the enterprise), and

- the cost of the stage (borne by the search engine, which provides the infrastructure resources) on which customers vote for a page.

So we have the metaphor of an election between two alternatives: state zero (the old one) and state one (the new one). The election is staged by a search engine, which provides the architecture for it, while the options customers vote for are provided by the enterprise.

If we go deeper, we find that, on a philosophical level, the cost of investigation has three important limits:

- Volatility.

- Uncertainty (or stability of returns).

- Confidence level.

These can also be defined as costs.

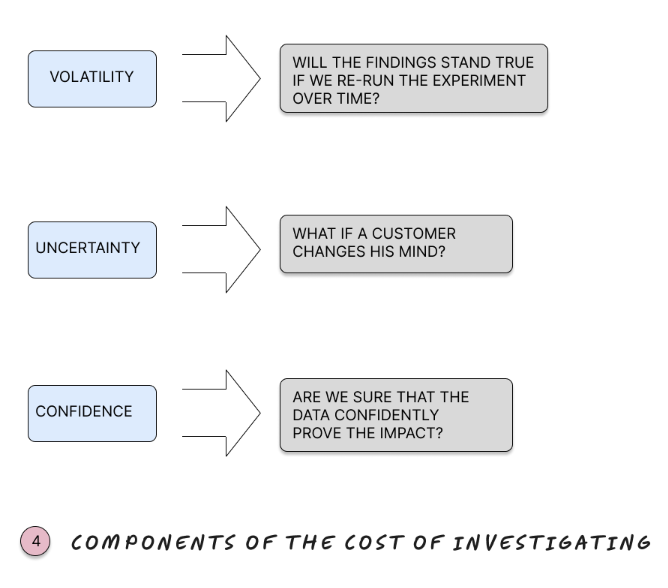

Volatility in time

Can we repeat it with the same outcome? The question is whether the result we get today will still hold true tomorrow. Even if we get a positive affirmation or impact, and we have seen a connection between what we test and the results we get, that does not mean the same connection will hold tomorrow.
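One way to make “can we repeat it?” concrete is to measure the same change over several time windows and check whether the lift keeps its sign and roughly its size. This is a minimal sketch under stated assumptions: the `effect_repeats` function, its 50% tolerance rule and the lift values are all hypothetical, chosen only for illustration, not a standard SEO metric.

```python
from statistics import mean


def sign(x: float) -> int:
    """Return 1 for positive, -1 for negative, 0 for zero."""
    return (x > 0) - (x < 0)


def effect_repeats(lifts: list[float], tolerance: float = 0.5) -> bool:
    """Check whether an observed lift repeats across time windows.

    `lifts` holds the relative change (e.g. 0.10 for +10%) measured for the
    same change in successive periods. Here the effect "repeats" if every
    period shows a non-zero lift with the same sign as the first one and no
    period deviates from the average lift by more than `tolerance` times
    that average. Both rules are illustrative assumptions.
    """
    if not lifts:
        raise ValueError("need at least one period")
    first = sign(lifts[0])
    # Any zero or sign flip means the connection did not hold.
    if first == 0 or any(sign(x) != first for x in lifts):
        return False
    avg = mean(lifts)
    return all(abs(x - avg) <= tolerance * abs(avg) for x in lifts)


# Hypothetical lifts from three successive measurement windows.
print(effect_repeats([0.12, 0.10, 0.11]))   # True: stable positive effect
print(effect_repeats([0.12, -0.02, 0.05]))  # False: sign flips in window two
print(effect_repeats([0.30, 0.05, 0.04]))   # False: first window looks like an outlier
```

A passing check only says the effect held in the windows observed so far; it says nothing about tomorrow, which is precisely the cost of volatility described above.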

Uncertainty

Can we be sure that it is not a mistake or a statistical outlier? Another cost is uncertainty, which is inherent to experimenting. Before running any experiment, we do not know whether it will yield any results at all. So uncertainty spans both the immediate results of the experiment and its future results. Every time we run it, we cannot be sure that the outcome is not a misconception, a mistake, or a statistical outlier.

Confidence

How much confidence is enough? Finally, the third cost is the cost of confidence, or, let’s call it, the strength of the signal. We do not know how strong a signal we must see to call an experiment successful: if we demand too strong a signal, we may never reach it; if we accept too weak a signal, what we see may be just noise.
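A common way to put a number on “strength of signal” is a significance test. The sketch below is one such option, not the lesson’s own method: it assumes the outcome is click-through rate, compares control pages (state zero) with variant pages (state one) using a two-proportion z-test, and treats impressions as independent trials, which real search data only roughly satisfy. The counts are hypothetical, and the 0.05 threshold is a convention, not a rule.

```python
import math


def two_proportion_z_test(clicks_a: int, impr_a: int,
                          clicks_b: int, impr_b: int) -> tuple[float, float]:
    """Two-sided two-proportion z-test on click-through rates.

    A is the control (state zero), B the variant (state one).
    Returns (z, p_value).
    """
    ctr_a = clicks_a / impr_a
    ctr_b = clicks_b / impr_b
    # Pooled CTR under the null hypothesis that both states perform equally.
    pooled = (clicks_a + clicks_b) / (impr_a + impr_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impr_a + 1 / impr_b))
    if se == 0:
        raise ValueError("no variation in the data (CTR of 0% or 100%)")
    z = (ctr_b - ctr_a) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def is_confident(p_value: float, alpha: float = 0.05) -> bool:
    """A lower alpha demands a stronger signal; a higher alpha admits more noise."""
    return p_value < alpha


# Hypothetical counts: 2.0% CTR on control pages vs 2.6% on variant pages.
z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}, confident: {is_confident(p)}")
```

The `alpha` parameter is where the dilemma from the paragraph above lives: lowering it protects against noise but makes success harder to reach. Note that a search engine delaying the effect of a change can push a real difference outside the measured window, so even a clean test only speaks for the data it saw.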

Cost of adoption

The second group is the cost of adoption. Suppose we take the risk of experimenting with customer utility, with customers voting for this or that option, and we:

- set aside the fact that the result is not certain and say “okay, we’ve got proof”,

- set aside the question of signal strength and say “we are more or less confident”,

- set aside the uncertainty about how long the effect will last (we do not know how long the customer’s current state of mind will persist) and say “okay, we are more or less fine with it”.

Then comes the cost of adoption.

For example, we ran the experiment on a limited number of pages, but to roll it out across the website, we must incur significant costs. Or, more commonly, we have introduced some changes but are not able to reproduce them consistently.

We can do this once, but not indefinitely, because we do not have the infrastructure or the processes set up this way. This is how an SEO experiment can get ahead of the company itself: we may have a positive affirmation, but the company cannot act on it. It is a promise not yet kept.

Who controls the cost of investigating?

If we take these three elements of the cost of investigating:

- the cost of volatility of results,

- the cost of confidence in the results, and

- the cost of uncertainty in the stability of returns,

we find that control over them belongs exclusively to search engines and customers.

Specifically, search engines affect 1) the cost of volatility and 2) the cost of confidence, whereas customers define 3) the cost of uncertainty, or stability of returns.

In other words, the cost of investigating depends on two external forces: the customer and the search engine. In effect, it is the enterprise that bears the cost of investigation, but it is external parties (the customer and the search engine) that control its actual price.

How do search engines control the cost of the experiment?

The cost of volatility

The search engine controls the cost of volatility by applying different weights to signals, i.e. by updating its algorithm. If a search engine wants to raise this cost for you, the enterprise, it assigns new weights to signals and ranking factors. What you had calculated as a 100,000 euro investment may then, because of a Google algorithm update (this is what we call the cost of volatility of signals), turn into, for example, 180,000 euro: an 80% increase in cost.
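To make the arithmetic of this example explicit, here is a small sketch. The function names are hypothetical, and the 80% surcharge simply restates the illustrative figure above; it is not a measured effect of any real Google update.

```python
def cost_after_update(planned: float, surcharge_pct: float) -> float:
    """Planned investment after an algorithm update adds `surcharge_pct` percent."""
    return planned * (100 + surcharge_pct) / 100


def increase_pct(planned: float, actual: float) -> float:
    """Percentage increase of the actual cost over the planned cost."""
    return (actual - planned) * 100 / planned


# The example from the text: 100,000 euro planned, 180,000 euro actually needed.
print(increase_pct(100_000, 180_000))   # 80.0
print(cost_after_update(100_000, 80))   # 180000.0
```

In practice the surcharge is not known in advance; that unknown is exactly the cost of volatility the enterprise has to budget for.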

The cost of confidence

The cost of confidence, once again, can be defined by search engines alone, for example, by delaying the effect of experiments.

You run experiments, and you get no confidence in the result. You run them once, then again, and over and over. You still do not get the confidence you need, so effectively you are acting blind. This, too, is something search engines can control. The search engine’s rationale is: “we want to control this cost in order to defend against manipulation, to fight the manipulators”.

Businesses must have other ways to define what’s useful for customers

In a philosophical sense, this means the search engine postulates that an enterprise doing search engine optimization cannot infer customer utility from SEO experiments alone. There have to be other ways to learn about customer utility besides SEO experiments. This is a fundamental postulate, or principle, that we discover here.

Simply because a search engine controls two of the costs of experimenting, it can make them intolerably high. That means it wants to discourage SEOs from running too many experiments to arrive at customer utility. It says: “Hey, website owners, find other ways to define customer utility besides running endless SEO experiments”.

How do customers control the cost of the experiment?

The customer controls the cost of stability of returns. This is something we cannot control, because customers may simply change their minds under the influence of many external factors.

The cost of experimenting: a strictly increasing function?

In the limit, this cost of investigating may turn out to be a strictly increasing function, because:

- the information we get from the experiment is blurred and noisy,

- we get no confidence in it,

- we get no guarantee that future returns will come the same way, and, finally,

- customers may change their minds.

So experiments become increasingly costly. This brings us to an important conclusion: at the level of strategy, SEO has to be parsimonious, i.e. minimal. The idea of SEO is to get the data with as few efforts as possible.

Summary

Let’s conclude this lesson with a short summary. We have discussed that SEO uses experiments as the only way to learn about customer utility. Apart from that, SEO can get some technical guidance from Google on optimizing obvious states. These can be handled by strictly following the guidance, but in the majority of cases, it is through SEO experiments that we actually learn what is valuable to customers.

At the same time, experiments carry two important implications, the first being the cost of investigation. We can investigate, or probe into, customer utility, but this comes at a cost controlled solely by external parties: the customer (who can change his or her mind later on) or the search engine, the provider of the experiment’s infrastructure, which may recalculate the signals or postpone the effects of the experiment. So we cannot understand exactly what connects to what, or what leads to what.

The second implication, a reflection of the cost of investigation, is the cost of adoption. Building a house on such shaky ground increases the cost of adopting what we learn from the experiment and can make that cost intolerable.

This leads us to the important notion that SEO strategy, or SEO activity, must be parsimonious. It must be very selective about what types of experiments to run and how to frame and set them up. In other words, it must save the company money by arriving at the important data that will shed light on customer utility.

Postback from Lesson 20

The cost of investigating refers to customer feedback that is not directly accessible or can be ambiguous, i.e. to scientific confidence in the experiment’s results. The cost of adopting improvements refers to the constancy of purpose problem. To be parsimonious, optimization must follow the “Go and observe” principle and be guided by the Voice of the Customer.
